IASLonline NetArt: Theory

Thomas Dreher

History of Computer Art

VI. Net Art: Networks, Participation, Hypertext

VI.3 Net Art in the Web

- VI.3.1 Web: Hypertext, Protocols, Browsers

- VI.3.2 HTML Art

- VI.3.3 Browser Art

- VI.3.4 Net Art, Context Art and Media Activism


In the first half of the nineties a number of developments were crucial for the evolution from the internet to the web. These developments yielded prerequisites for net art.

Until 1993 Gopher and the web were competing internet systems. When the University of Minnesota decided to introduce an annual fee for the Gopher software, CERN (Conseil Européen pour la Recherche Nucléaire) in Geneva released its competing web software into the public domain, and the internet participants chose the open web software. Open source became a fundamental condition for the far-reaching distribution of the web.

The developments that facilitated access to the internet, surfing and the setting up of websites, namely the web browsers and the definition of web standards (protocols), led to a sharp increase in the number of internet participants in the nineties. In 1993-94 the development from the internet to the web culminated in the web browser "Mosaic", the formation of the W3 (WWW) Consortium for the definition of standards, and reports in newspapers and journals on the growing number of participants, from 2.63 million in 1990 to 9.99 million in 1993. By December 1995 the number had grown to 15 million. In June 1993 130 sites were stored on servers. Two years later the pages of 23,500 sites could be called up online. 1

A proposal for a new project provided the impulse for a chain of developments resulting in the web: On 12 November 1990 Tim Berners-Lee and Robert Cailliau presented in "World Wide Web: Proposal for a HyperText Project" the plan for a web of linked hypertext documents to be stored on several servers of CERN, the European Organization for Nuclear Research:

HyperText is a way to link and access information of various kinds as a web of nodes in which the user can browse at will. It provides a single user-interface to large classes of information (reports, notes, data-bases, computer documentation and on-line help). We propose a simple scheme incorporating servers already available at CERN.

Berners-Lee and Cailliau suggested that the implementation of simple browsers on "the user's workstations" would provide access to the "Hypertext world". Furthermore, applications were planned that would enable web participants to add documents. 2 This and the definition of protocols as binding guidelines for networks between components of different types 3 constituted a framework for the construction of a network between CERN's various servers: The web arose from a project of the European research center.

From 1990 to 1991 the web browser WorldWideWeb (December 1990), the first version of the Hypertext Transfer Protocol (HTTP version 0.9, 1991, see below) and the tags of the Hypertext Markup Language (HTML tags, 1991, see below) were developed at CERN. 4

Berners-Lee, Tim: Browser WorldWideWeb, 1990. Screenshot of a NeXT Computer, CERN.

The browser "WorldWideWeb" could store and open files in the formats (PostScript, films, sound files) supported by the NeXT system (for computers made by NeXT). Files stored on FTP and HTTP servers could be called up with "WorldWideWeb". The browser contained a WYSIWYG (What You See Is What You Get) editor that could be used to open pages in separate windows, to edit them and to link them. Web participants who wanted to control the browser's presentation had to define the properties of "basic style sheets" with the "style editor".

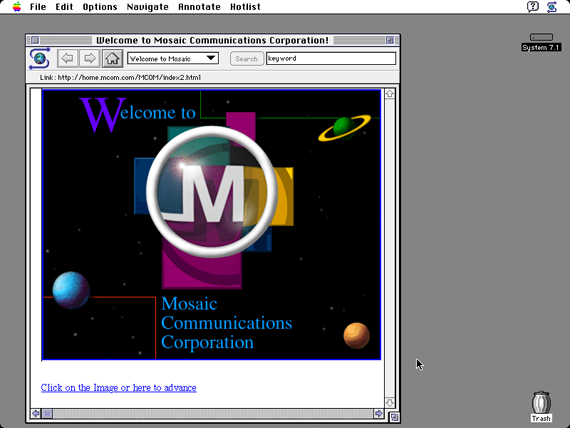

Pei-Yuan Wei was inspired by HyperCard when he developed the browser "ViolaWWW". In 1992 he presented the finished version for the X Window System on Unix. In 1993 Marc Andreessen and Eric Bina offered "Mosaic" as a browser that was easy to install on the operating systems Windows, Mac OS and Commodore Amiga. "Mosaic" became the most used browser, followed as early as the end of 1994 by Andreessen's "Netscape Navigator". These are steps in the prehistory of the "browser war" between Netscape and Microsoft. In 1998 the latter won the competition with "Internet Explorer". 5

Andreessen, Marc/Bina, Eric: Browser NCSA Mosaic 1.0, 1993. Screenshot of an Apple Computer with the operating system Mac OS 7.1.

Technical standards are the precondition of the internet's data traffic. These standards are defined by protocols. The File Transfer Protocol (FTP) had been used in the ARPANET since the seventies; it is part of the TCP/IP (Transmission Control Protocol/Internet Protocol) family of internet protocols and now defines the technical standards for uploading files to servers. 6

For the Open Systems Interconnection (OSI) Reference Model the International Organization for Standardization (ISO) has defined since 1983 the functions of seven layers, from the physical layer to the application layer. The fourth layer defines the segmentation of the data stream and the avoidance of traffic congestion: TCP fulfils the function of the transport layer and offers a uniform technical basis for the upper, application-oriented layers (the fifth to the seventh layer). The flow control of the transport layer (the fourth layer) relieves these layers of the transport tasks that control the physical connection (the first layer), the transmission between nodes (the second layer) and the routing to the destination (the third layer). For transmission across different systems of networking and telecommunication the transport layer organises the segmentation of the data into packets, so that the application-oriented layers process only byte streams, similar to a computer's transfer of a file from a hard disk or another storage medium to the working memory. 7
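The division of labour described above can be illustrated with a minimal Python sketch. It is not an implementation of TCP: the function names `segment` and `reassemble`, the segment size and the sample message are assumptions chosen for illustration. The sketch only shows how numbered segments allow the upper layers to receive an intact byte stream.

```python
# Names of the seven OSI layers, counted from the physical layer upwards.
OSI_LAYERS = {
    1: "physical", 2: "data link", 3: "network", 4: "transport",
    5: "session", 6: "presentation", 7: "application",
}


def segment(stream: bytes, size: int) -> list[tuple[int, bytes]]:
    """Cut a byte stream into numbered segments (a transport-layer task)."""
    return [(number, stream[start:start + size])
            for number, start in enumerate(range(0, len(stream), size))]


def reassemble(segments: list[tuple[int, bytes]]) -> bytes:
    """Rebuild the byte stream, even if the segments arrived out of order."""
    return b"".join(data for _, data in sorted(segments, key=lambda s: s[0]))


message = b"HyperText is a way to link and access information"
segments = segment(message, size=8)
# The upper layers only ever see the restored byte stream.
restored = reassemble(list(reversed(segments)))
```

Here the segments are deliberately delivered in reverse order; the sequence numbers are sufficient to restore the original stream.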

The seven layers of the OSI reference model (Yao: OSI 2011).

The transfer of web documents between computers is regulated by the Hypertext Transfer Protocol. It was defined in 1996 by the W3 Consortium and the Internet Engineering Task Force (IETF) as HTTP/1.0. When the computer of a web participant starts a request, the Transmission Control Protocol tries to establish a connection to an HTTP server via a port (usually port 80), and this attempt ends with either an error message or an established connection. 8
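What travels over such a connection is plain text. The following Python sketch, which opens no network connection, formats a minimal HTTP/1.0 request and splits a server's status line into its parts. The function names and the sample path are assumptions for illustration.

```python
HTTP_PORT = 80  # default port for HTTP connections


def build_request(path: str = "/") -> bytes:
    """Format a minimal HTTP/1.0 GET request as sent over the TCP connection."""
    return f"GET {path} HTTP/1.0\r\n\r\n".encode("ascii")


def parse_status_line(line: str) -> tuple[str, int, str]:
    """Split a status line such as 'HTTP/1.0 404 Not Found' into its parts."""
    version, code, reason = line.strip().split(" ", 2)
    return version, int(code), reason


request = build_request("/index.html")
status = parse_status_line("HTTP/1.0 404 Not Found\r\n")
```

The status code in the server's first response line tells the browser whether the requested document follows or whether an error has to be shown.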

The Uniform Resource Identifier (URI) consists of a locator (URL), marking the location of the computer storing the HTML document to be found, and the name (URN) of this file. 9
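The components of such an address can be separated with Python's standard library. The sketch below is only an illustration; the address `www.example.org` and the function name `describe` are assumptions.

```python
from urllib.parse import urlsplit


def describe(url: str) -> dict[str, str]:
    """Separate a URL into protocol, host (location) and path (document)."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname or "",
        "path": parts.path,
    }


address = describe("http://www.example.org/art/index.html")
```

The host part marks the computer storing the document, and the path names the document on that computer.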

Since 30 September 1998 the Domain Name System (DNS) has been coordinated by the Internet Corporation for Assigned Names and Numbers (ICANN). 10 The domain names used in URL addresses consist of letters and are stored and managed in a large database. The DNS maps these names to IP addresses, which in IPv4 consist of four numbers between 0 and 255 separated by dots. The IP addresses are the basic elements of the TCP/IP standards. The providers' DNS servers automatically receive the current information necessary for mapping URL addresses to IP addresses. The DNS servers' translation from the established URL addresses to IP addresses offers opportunities for censorship: Such an intervention blocks not only specific webpages but all contents of a website. 11
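Why a DNS-level intervention affects a whole website can be shown with a toy resolver. The sketch below performs no real DNS query: the name table, the blocklist and the function `resolve` are hypothetical, and the address belongs to a range reserved for documentation.

```python
import ipaddress
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical name table; 192.0.2.10 lies in a range reserved for documentation.
NAME_TABLE = {"www.example.org": "192.0.2.10"}


def resolve(url: str, table: dict, blocked=frozenset()) -> Optional[ipaddress.IPv4Address]:
    """Return the IPv4 address for the host named in `url`, or None."""
    host = urlsplit(url).hostname
    if host is None or host in blocked or host not in table:
        return None
    return ipaddress.IPv4Address(table[host])


address = resolve("http://www.example.org/a.html", NAME_TABLE)
blocklist = {"www.example.org"}
# Every page of the site shares the host name, so every lookup fails.
page_a = resolve("http://www.example.org/a.html", NAME_TABLE, blocklist)
page_b = resolve("http://www.example.org/b/c.html", NAME_TABLE, blocklist)
```

Because the lookup uses only the host name and ignores the path, a single blocked name makes all documents of the site unreachable by name.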

The source code with commands instructing browsers how to present webpages is a further component of the web. The "Standard Generalized Markup Language" (SGML) was the basis of the format used in documents at CERN (SGMLguid). In 1991 Tim Berners-Lee defined 20 HTML elements in "HTML Tags"; many of them were influenced by SGMLguid. 12 In November 1995 Tim Berners-Lee and Dan Connolly laid down the first official standard, HTML 2.0. In section 3 of this document HTML is described as "an application of SGML". 13 The tags between angle brackets as marks for commands and the slashes marking the ends of commands recur from SGML to HTML, in Tim Berners-Lee's own words:

SGML was being used on CERN's IBM machines with a particular set of tags that were enclosed in angle brackets, so HTML used the same tags wherever possible. 14
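The pairing of opening tags in angle brackets and closing tags with a slash can be made visible with the HTML parser of Python's standard library. The class name `TagLister` and the sample markup are assumptions for illustration.

```python
from html.parser import HTMLParser


class TagLister(HTMLParser):
    """Record every opening and closing tag in the order of appearance."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("open", tag))

    def handle_endtag(self, tag):
        self.events.append(("close", tag))


def list_tags(markup: str) -> list:
    parser = TagLister()
    parser.feed(markup)
    parser.close()
    return parser.events


events = list_tags('<h1>Title</h1><p>Text with a <a href="next.html">link</a></p>')
```

The recorded sequence shows how the anchor element is nested inside the paragraph: it is opened and closed before the paragraph itself is closed.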

HTML and its extension XHTML 15 became the standard document types for presentation in web browsers. Film, image and sound files can be integrated into these document types. 16 Since 1995 net artists have thematised HTML in web projects (see chap. VI.3.2), and since 1997 they have problematised the browser presentations of documents and links (see chap. VI.3.3).

Tim Berners-Lee wrote on his browser/editor "WorldWideWeb":

I never intended HTML source code...to be seen by users...But the human readability of HTML was an unexpected boon. To my surprise, people [at the CERN] quickly became familiar with the tags and started writing their own HTML documents directly. 17

It is easy to learn to work with HTML code, which facilitates the construction of webpages by writing the source code. It is not necessary to type the character combinations for links, anchors and other commands, because easy-to-use and freely downloadable editors insert them at a mouse click. Editors that offer simple interfaces as work surfaces and hide the source code can, however, leave traces in the source code that demonstrate the user's inability to control the code. The source codes displayed by browsers show such traces of editors, for example unnecessary code elements or copyright information of the software firm.

In comparison to the hyperfictions for CD-ROMs (see chap. VI.2.2), early web projects by artists open up new scope through the possibilities of controlling the browser presentations of webpages via their source codes. These possibilities include functional and graphical elements like cells, frames and layers as well as the integration of files stored on distant servers into one webpage. The codes invite observers to explore the functions embedded in browser presentations and to reconstruct their programming. With this open relation between code and presentation the web projects presented below contradict the "dictatorship of the beautiful appearance" 18 imposed by the "Graphical User Interfaces" (GUI) shown on the screens of personal computers: Browsers include possibilities to call up the source code, and editors are means to modify it, in contrast to code-hiding interfaces with buttons whose clicks activate functions. The internet in the era of the World Wide Web provokes doubts about the achievements of personal computers, whose desktops make it possible to produce documents not only in a simplified manner but also in a manner predetermined by the programmers of the GUI.

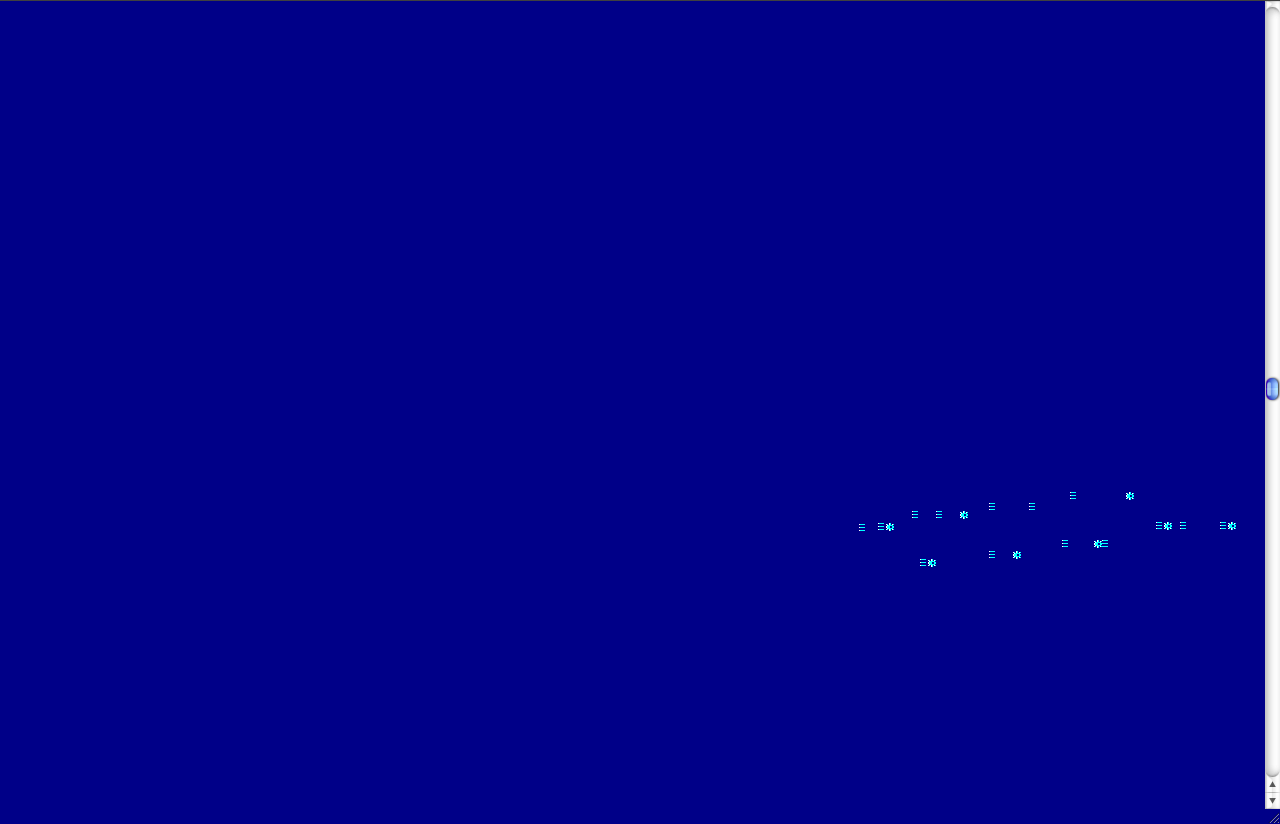

Friese, Holger: unendlich, fast..., 1995, web project (screenshot 2010).

Holger Friese's "unendlich, fast..."/"nearly infinite..." (1995) consists of a browser field with an almost completely blue surface. In the source code bgcolor="#000088", the RGB value for "Navy/low blue", determines the colour, and its extent is produced by repetitions of the command <br>, the code for line breaks. Scrolling up and down the blue plane in the browser reveals two white signs repeated several times within a narrow field: stars and three lines of equal length arranged parallel above each other. These signs are neither letters of the alphabet nor characters found on keyboards. Friese integrated a screenshot of a PostScript file (file name: "ende.gif") into the blue plane. He writes about this screenshot:

And that's the true reason why the background is blue, it is a screenshot of a Postscript file (the data structure that's sent to a laserprinter to draw a lemniscate) which had a blue background on a very old DOS operated computer. 19

The signs constitute "a lying eight, the sign for infinity, in a form readable by computers." 20 The white signs of the image file appear on the monochrome plane isolated and removed from their former context. The "infinite" blue appears only "nearly" infinite, as the title says, because it is interrupted by these white signs and has a finite height and width.
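How little code such a page requires can be shown with a short Python sketch that writes comparable markup. It is not Friese's source code: only the colour value, the <br> command and the file name "ende.gif" are taken from the description above, whereas the number of line breaks and the position of the image are assumptions.

```python
def hex_to_rgb(colour: str) -> tuple:
    """Convert a value like '#000088' into its red, green and blue parts."""
    value = colour.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def blue_page(line_breaks: int, image: str = "ende.gif") -> str:
    """Write a page whose height results only from repeated line breaks."""
    breaks = "<br>\n" * line_breaks  # assumed count, not Friese's
    return (f'<html>\n<body bgcolor="#000088">\n{breaks}'
            f'<img src="{image}">\n{breaks}</body>\n</html>\n')


rgb = hex_to_rgb("#000088")
page = blue_page(line_breaks=500)
```

The colour value contains no red and no green, only a medium share of blue, and the length of the blue plane grows with every additional line break without ever becoming infinite.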

Jodi: wwwwwwwww.jodi.org, 1995, web project (screenshot 2012).

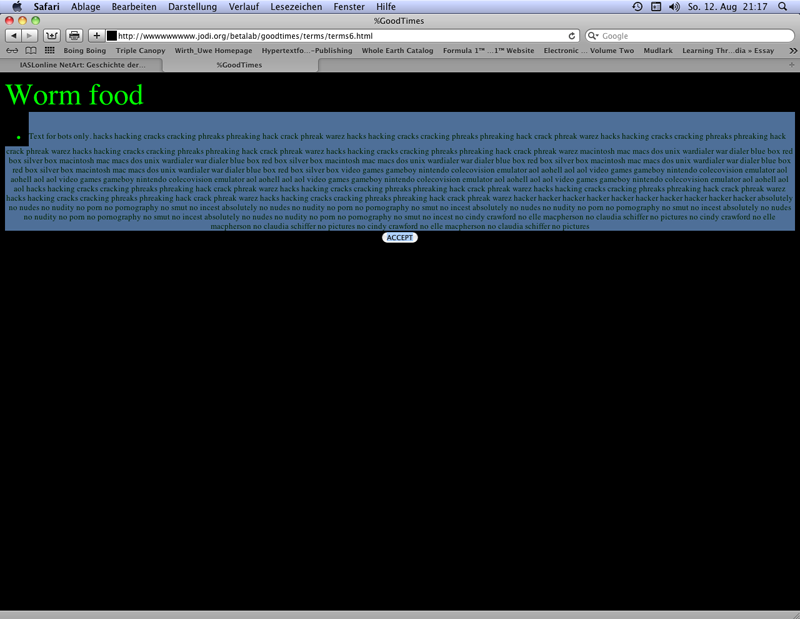

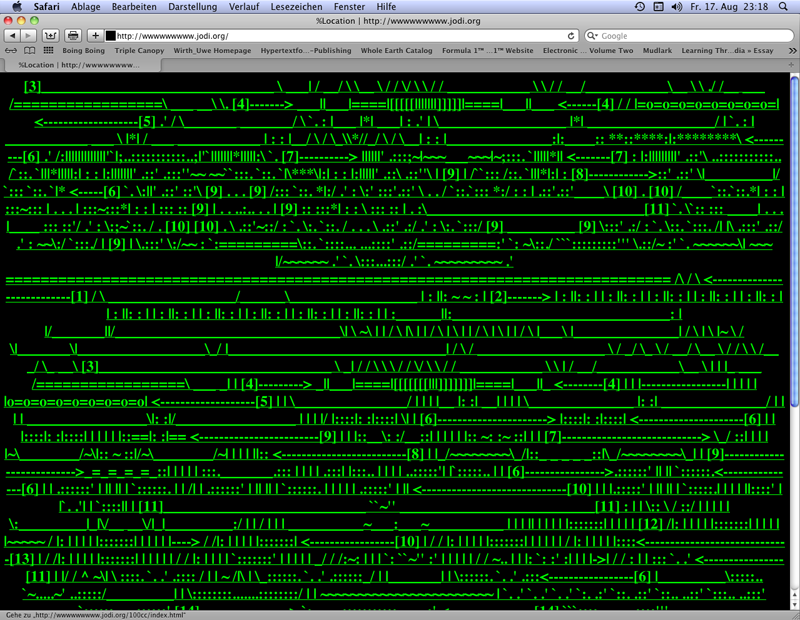

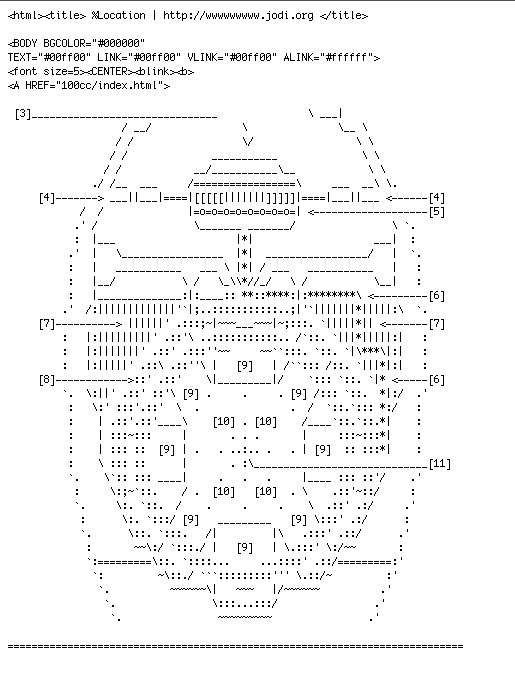

Jodi (Joan Heemskerk and Dirk Paesmans) connect in wwwwwwwww.jodi.org (1995) graphically unusual webpages containing some text elements with links that are often recognisable only via cursor movements. Many pages present repeated images. Some of the images or image series contain links opening new images. Only a few of the images were made with a digital camera. More often two-dimensional computer graphics are presented, and sometimes animated GIFs are shown. The HTML code is used to call up the same stored images several times within a webpage. Text elements can be components of the images as well as parts of the HTML document. HTML elements like <blink>, JavaScript like the "function scrollit" (automated scrolling) and photo sequences in animated GIFs are means to control the 'moving' monitor presentations of the webpages. Some links are designed via the tag <form action> as form buttons.

Jodi: wwwwwwwww.jodi.org, 1995, web project: text becoming visible after being marked by mouse-over (screenshot 2012).

"Accept" buttons are located under "agreement" declarations parodying copyright regulations and disclaimers. The remark "Texts for bots only" can be found in the source code of a page whose browser presentation shows nothing more than the text "Worm food" with an "accept" button located below. The source code includes word sequences like "hackcrackphreakwarez" that hint at the culture of sharing open content ("warez") and the hacker scene. Anyone who moves the cursor over the black field between the "Worm Food" headline and the "accept" button can read the text of the source code in black letters on a blue background as part of the browser presentation.

Jodi: wwwwwwwww.jodi.org, 1995, web project: browser presentation of the source code written in ASCII (screenshot 2012).

In some browsers the first page presents a flashing source code in ASCII (not all browsers 'blink'). ASCII is an abbreviation for "American Standard Code for Information Interchange", a code that represents characters as numbers. Platforms for ASCII art collect and store typograms created with ASCII elements forming patterns that look sometimes like diagrams and sometimes like pictures. Jodi uses the browser to dissolve a configuration of ASCII elements, which has a figurative contour on the level of the source code, into a disorienting sequence of signs: lines, dashes, points and digits in repeating sequences and variations. The whole field of this code presentation contains a link leading to another page of this web project.

Jodi: wwwwwwwww.jodi.org, 1995, web project: a detail of the first page's source code (browser presentation, screenshot 2012).

If these webpages and the relations between them refer to a common concept, then it is the variation of forms, which is often disorienting because of the overall impression of overabundance. Jodi's way of exploring the possibilities of webpage design must have been a provocation for observers interested in contemporary web design. 21
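Two aspects of this passage can be sketched in Python: the numbers behind ASCII characters, and one plausible mechanism by which a browser dissolves a figure drawn in the source code. The sketch assumes that the dissolution results from the way HTML rendering collapses spaces and line breaks outside preformatted text; this mechanism is an assumption, as are the sample figure and the function names.

```python
import re


def ascii_codes(text: str) -> list:
    """Return the number that ASCII assigns to each character."""
    return [ord(character) for character in text]


def collapse_whitespace(source: str) -> str:
    """Imitate HTML rendering, which merges runs of spaces and line breaks."""
    return re.sub(r"\s+", " ", source).strip()


# A small figure whose contour depends on line breaks and indentation.
FIGURE = "  /\\\n /  \\\n/____\\"

codes = ascii_codes("Jodi")
rendered = collapse_whitespace(FIGURE)
```

In the source the three lines form a triangle, whereas the collapsed version is a single row of slashes and underscores without a recognisable contour.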

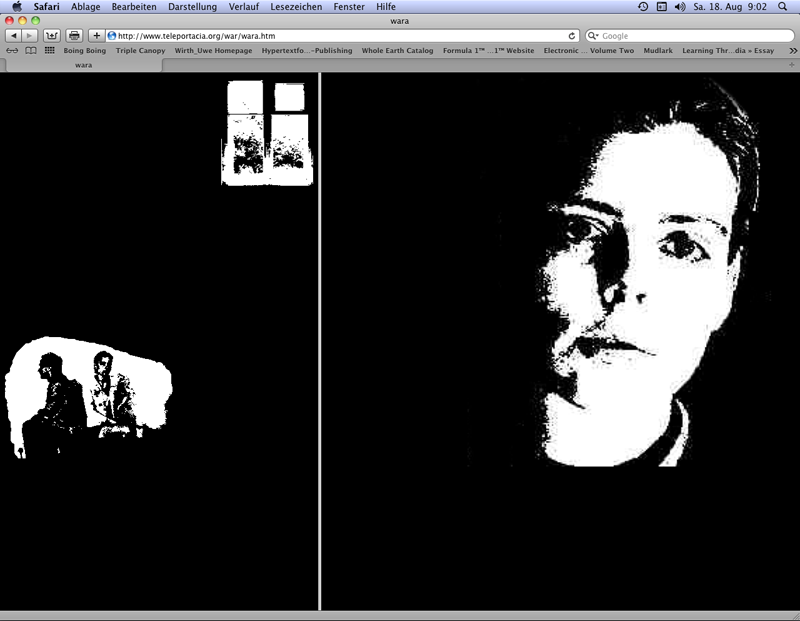

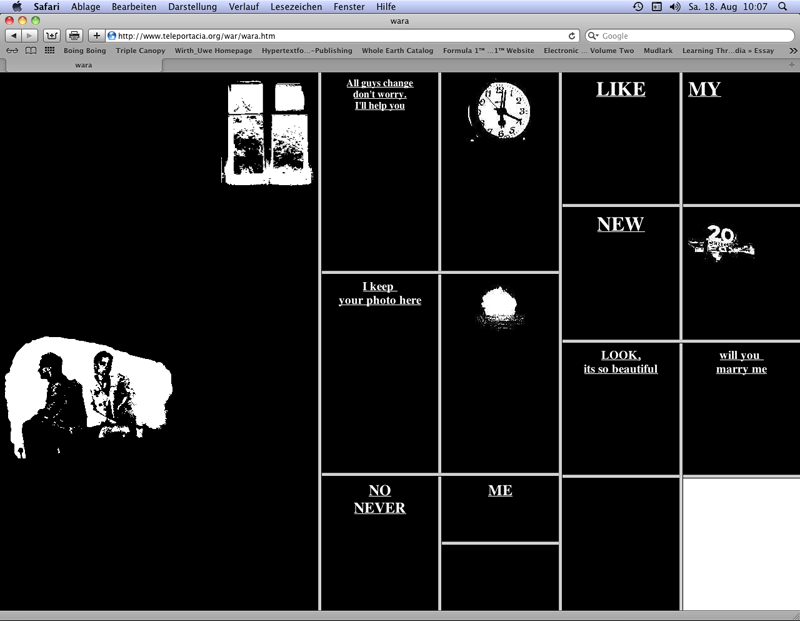

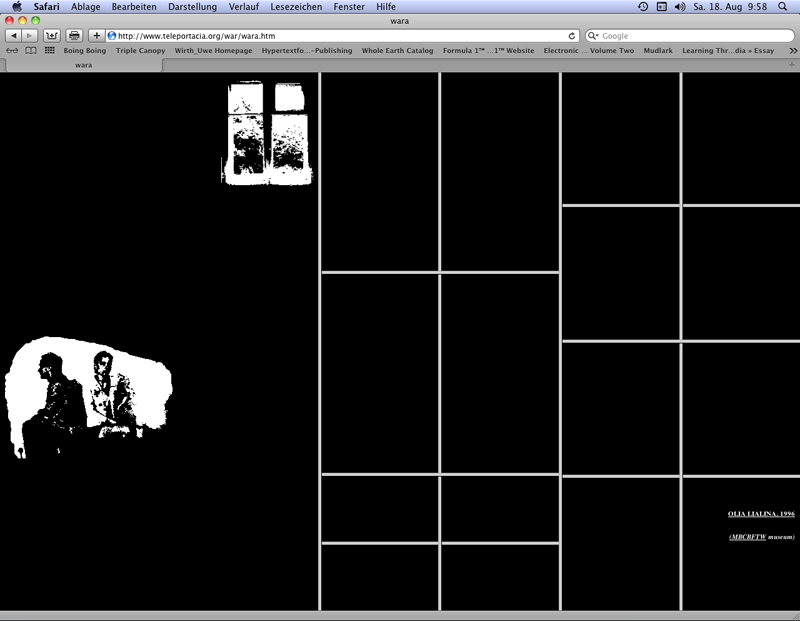

In "My boyfriend came back from the war" (1996) Olia Lialina constitutes a hyperfiction by concatenating webpages via frames (without scrollbars). The frames enclose words, word combinations or sentences. Only a few frames include images (without text), in one case an animated GIF. The frames are divided up into 'frames in frames': Clicks on texts or images within the frames activate links that open new pages, and in the process the webpage is divided into further frames.
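The principle of frames within frames can be sketched with a recursive Python function that writes nested frameset markup. It is not Lialina's source code: the file name "text.html", the equal column widths and the restriction to vertical divisions are assumptions for illustration.

```python
def split_frame(depth: int, page: str = "text.html") -> str:
    """Write frameset markup whose right-hand frame is divided `depth` times."""
    if depth == 0:
        # A single frame without scrollbars.
        return f'<frame src="{page}" scrolling="no">'
    inner = split_frame(depth - 1, page)
    return (f'<frameset cols="50%,50%">'
            f'<frame src="{page}" scrolling="no">{inner}</frameset>')


markup = split_frame(2)
```

Each further division replaces one frame with a frameset containing two frames, so that two divisions yield three frames on the screen.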

Lialina, Olia: My boyfriend came back from the war, 1996, web project (screenshot 2012).

In comparison to Douglas Carl Engelbart's predigital model of notched cards strung together edge-to-edge (see chap. VI.2.1 with ann.12), the cards, or rather the contents of the frames, in "My boyfriend came back from the war" are digitally set 'into motion': from adjacent card edges to a grid constituted by grey frames whose contents on black backgrounds become 'mobile'. The notches are replaced by Lialina's selection of links on fields within frames, which open further frames within linked fields.

Lialina, Olia: My boyfriend came back from the war, 1996, web project (screenshot 2012).

At the beginning the first frame fills the entire height of the screen and contains an image of a window at the top right as well as an image of a couple at the lower left. 22 After a click on the couple in the first frame, a second frame with a front view of Lialina's face appears on the right side. The left frame includes no further links, whereas the right frame is divided from click to click into further frames with texts and images. Clicks on one of these frames first change the frame content (images or texts) and then divide the frame into two or four further frames. The end of the click sequences on frames causing their divisions is marked by monochrome black fields as frame contents. At the lower right there appears not a further black field but a white frame presenting, as the source code reveals, the text "LOOK, it's so beautiful" in white letters on a white background. In the browser Netscape 4 the text became visible for a short time on mouse-over. Lialina wrote to Roberto Simanowski about this presentation: "It was made invisible to be an invisible link. You can see it if you select it." 23 A click on this white frame leads to a frame with a mailto function for Lialina's e-mail address and, in the current version (2012), on a line under the mailto function, to a link leading to the platform "Last Real Net Art Museum", which offers copies, variations and alternatives to Lialina's "My Boyfriend came back from the war" programmed by artists from 1998 to 2012.

Lialina, Olia: My boyfriend came back from the war, 1996, web project (screenshot 2012).

The story of a woman wanting to marry a soldier is laid out by Lialina in a multi-branched but nevertheless sequential manner from left to right and from top to bottom. Words in several adjacent frames point to narrative interrelationships or yield parts of sentences that are dissolved by further clicks.

The artist matches her narrative strategy with the permutational possibilities of the frame combinations: The frame permutations and the combinations of sentence fragments are coupled. Lialina uses a frame-hypertext narrative strategy resulting in possibilities to play with semantically occupied fields provoking readers to follow the prearranged narrative direction. 24

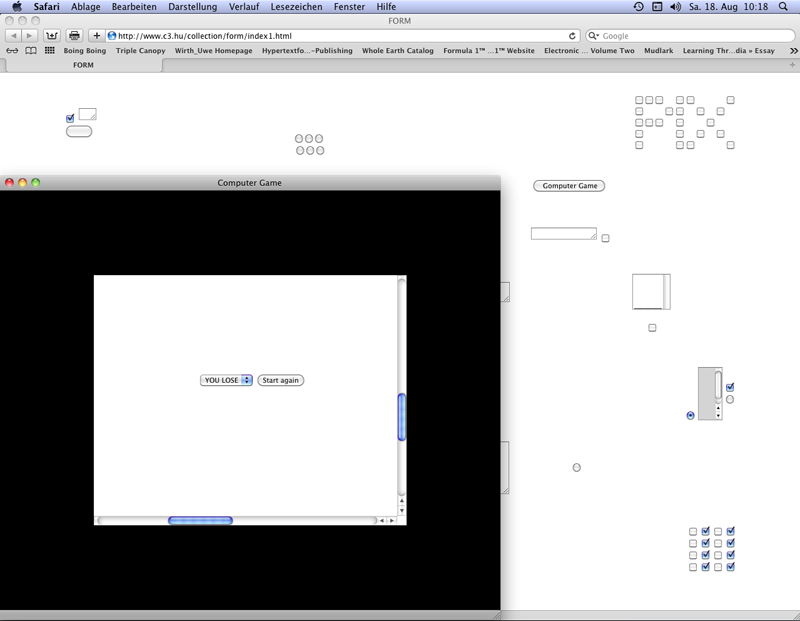

Shulgin, Alexei: Form Art, 1997, web project (screenshot 2012).

Alexei Shulgin showcases source codes that were built for specific purposes without any purpose in browser presentations. The title "Form Art" (1997) recalls the HTML element for web forms (<form>). Shulgin utilizes input fields, control buttons and checkboxes in an HTML art augmented by JavaScript and Java. These elements are distributed on webpages. Clicks on the control buttons and checkboxes open new browser windows that again present constellations of input fields, control buttons and checkboxes. 25 In "Form Art" the forms are not used to send data to a server for further processing but to activate functions of the artistic project's webpages, like a marquee constituted by checkboxes.

The examples presented above are the results of experiments with the possibilities of programming browser presentations with HTML: The relevant browsers were Netscape Navigator 1 through 3 and Internet Explorer 1 through 3. The web projects presented below use uncommon link strategies to thematise the internet as a developing public archive.

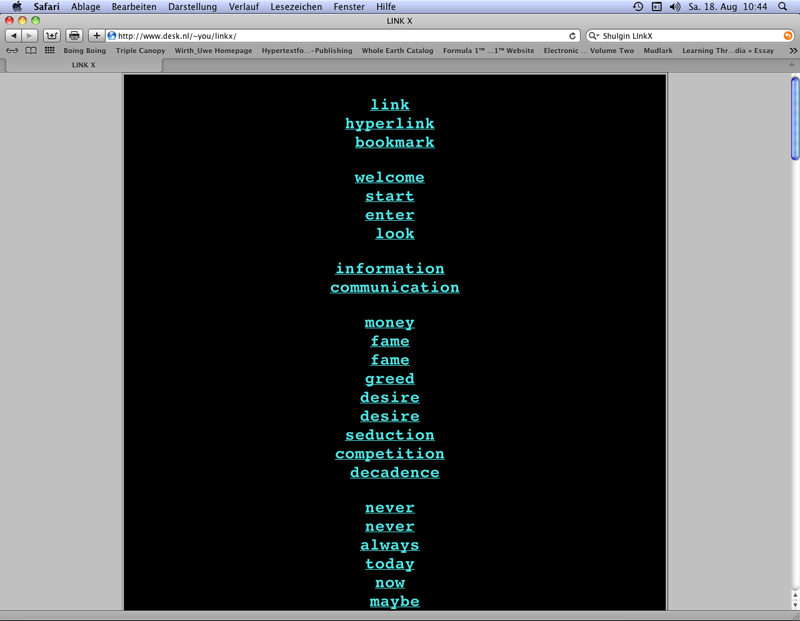

Shulgin, Alexei: Link X, 1996, web project (screenshot 2012).

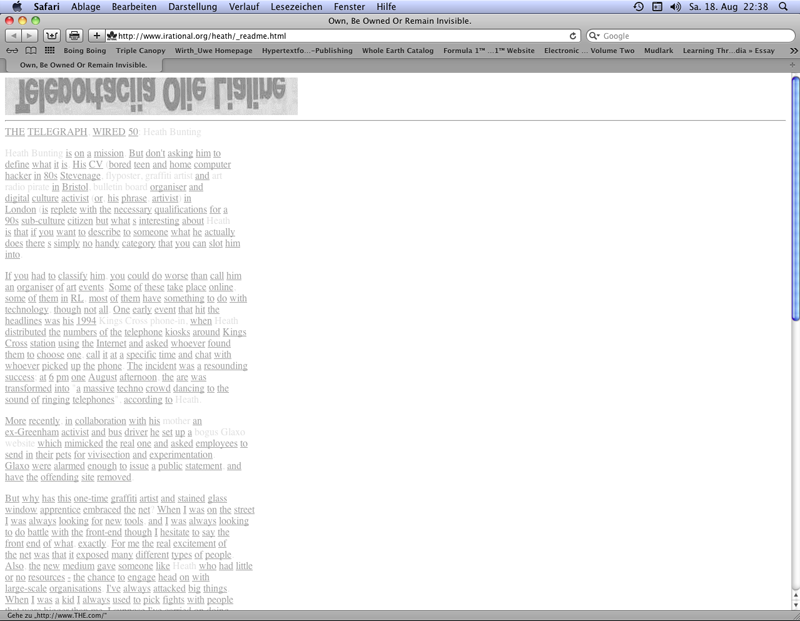

Alexei Shulgin in "Link X" (1996) and Heath Bunting in "_readme – own, be owned, or remain invisible" (1998) selected words for the construction of URL addresses: The artists set "www." before the self-chosen (Shulgin 26) or found words (Bunting 27) and added the top-level domain ".com" used worldwide for commercial sites. In contrast to Heath Bunting's concentration on URL addresses ending with ".com", Shulgin alternates between ".org" and ".com", and the resulting URL addresses lead in some cases to different websites. At the time of the projects' creation the words combined with links in this way only seldom led to documents, whereas around 2000 unused URL addresses became rare. The words readable in the browser presentations deliver materials for the construction of links that can be used to explore the web as data space. In the early phase of the web this strategy was an interesting investigative attitude towards the emerging data landscape.
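The construction principle shared by both projects can be sketched in a few lines of Python. The sketch is not the artists' code: the function name, the "http://" prefix and the trailing slash are assumptions, and the sample words are taken from the title of Bunting's project.

```python
import re


def words_to_urls(text: str, tld: str = "com") -> list:
    """Turn every word of a text into a URL address: www. + word + domain."""
    words = re.findall(r"[A-Za-z]+", text)
    return [f"http://www.{word.lower()}.{tld}/" for word in words]


com_links = words_to_urls("own, be owned")
org_links = words_to_urls("own", tld="org")
```

Whether such an address leads to a document depends solely on whether somebody has registered the word as a domain name.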

Bunting, Heath: _readme – own, be owned, or remain invisible, 1998, web project (screenshot 2012).

URL addresses with the top-level domain .org are intended for organisations. Although there are no restrictions, this top-level domain is used mostly by organisations with charitable aims. The URL addresses of the top-level domain .com are intended for e-commerce. Among them are the websites of firms, often internationally operating corporations. Owners of websites who occupied URL addresses similar to firm names could either receive money as the result of an out-of-court settlement for a voluntary cession, or they were faced with claims and lawsuits.

In 1999 eToys, the American online toy retailer, tried to force the Swiss artists' group etoy in an out-of-court settlement to hand over its URL address etoy.com. After an interlocutory injunction the firm Network Solutions, which was responsible for the administration of .com addresses, deleted etoy.com from the main register of URL addresses in December 1999. As a result not only etoy's website but also its mailbox was no longer accessible. This sanction was not covered by the court decision.

After several negotiations without agreement, members of the Electronic Disturbance Theater and RTMark pursued a strategy that put eToys under pressure at several levels until the management withdrew the lawsuit at the beginning of 2000.

During the Christmas season of 1999 virtual sit-ins were carried out with the tool "FloodNet" to prevent sales on the website eToys.com for a short time. The software "FloodNet", developed by members of the group Electronic Disturbance Theater, does not change the content of a targeted website but slows down access to it and, in extreme cases, blocks it. A Java applet issues reload calls: In three parallel frames a website is loaded in three-second cycles. The server of a website is asked for a non-existent URL address; it reports the non-existence to the web participants and records it in the "server error log". Simultaneous FloodNet calls by many web participants can overload the "server error log". In these cases access to the targeted website is blocked. 28

The virtual sit-ins were combined with a successful press campaign. Together they damaged the image of eToys. The share price fell dramatically, and in February 2001 eToys filed for bankruptcy protection after disappointing Christmas sales. The "ToyWar" demonstrates the appropriation of the data space by corporations. 29 Bunting's textual instrument for exploring the segmentation of the commercial data space anticipates the problems that made etoy's self-defence necessary.

The examples of HTML art presented above explore web fundamentals and direct the attention of web participants to HTML as a basic tool for creating webpages. The possibilities of using the web need not be prefabricated by platforms such as social networks, whose frameworks are mostly guided by commercial interests, and it is not necessary to use only these platforms. In the context of the web 1.0 e-commerce and the free exchange of information were opposites, whereas in the web 2.0 platforms support and promote the exchange of information between registered users because this boosts the profit of the platform owners: For advertisers and platform owners the users have become playthings.

In contrast, in the web 1.0 the net participants, while building their own websites and using tactical tools like "FloodNet", understood themselves as acting on their own behalf and according to their own standards. If these actors wanted to resist restrictions, they organised campaigns and looked for participants. They used and still use means resulting from the tactical possibilities offered by the free distribution of information on the web and by activist web tools that are free of charge.

The examples presented in chapter VI.3.2 demonstrate the programming of browser presentations and the use of links as accesses to site-external webpages. The examples chosen for this chapter bring these two aspects together in the ways they thematise browser functions: They present not only browser functions (art for browsers) but also alternatives (art as browser) to the popular web browsers offered by Netscape and Microsoft (Internet Explorer).

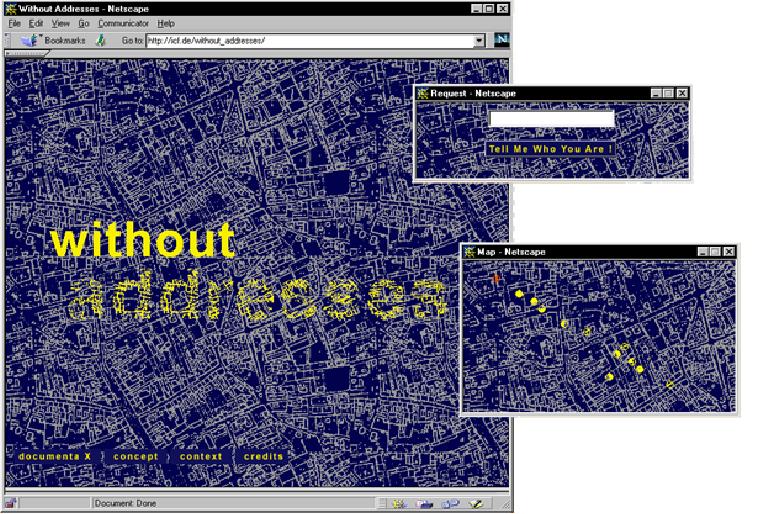

The web is used as a resource for data access in "without addresses" (1997) by Joachim Blank and Karl-Heinz Jeron 30 as well as in Mark Napier's "The Shredder" (1998). 31 These projects offer web participants possibilities to select accesses to documents stored on servers connected via the internet, but neither project offers ways to influence the modification of these webpages. While Napier makes the input of a URL address possible, "without addresses" prompts web participants with the request "tell me who you are" to write entries. It then uses these entries as keywords in search systems (AltaVista and Yahoo), selects a webpage and constructs a new webpage with it. The selected URL address is noted on the transformed document. This document is stored in an archive.

Blank, Joachim/Jeron, Karl-Heinz: without addresses, 1997, web project (illustrations of the project documentation by Blank & Jeron).

Modified webpages are stored in the archive of "The Shredder". In contrast to the access to these files, "without addresses" offers controlled access to the archive's recently stored and transformed webpages via a blue-and-white map. If a web participant moves the cursor over the map (without street names), which is partitioned into fields, then the line in a text box changes. This text line consists of the IP address of a web participant and that participant's entries.

In "without addresses" the answers to the question "tell me who you are" are used to generate and store entries for the virtual inhabitants of the map fields. Mouse clicks on the orange points of the map fields open the files containing the information on the virtual inhabitants. The files, generated by an algorithm using the input of web participants, contain the pseudo-identities of a fictitious town's inhabitants.

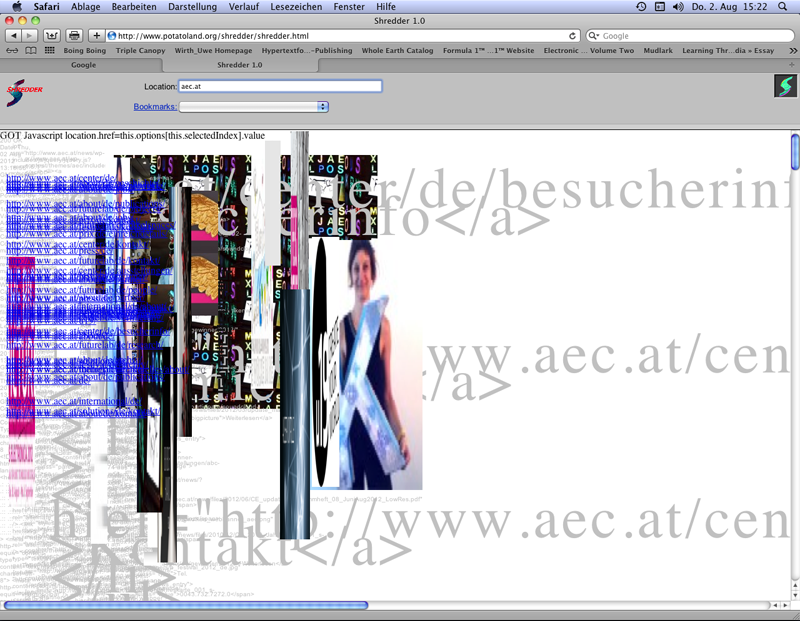

Napier, Mark: The Shredder, 1998, web project (screenshot 2012).

"Without addresses" and "The Shredder" transform the layout of the found webpages. In "without addresses" the text found via search systems and rendered in a handwriting-like digital font overlies a picture taken from the source document. From the webpages called up by net participants entering URL addresses, "The Shredder" shows on the top left side of the transformed webpage the links included in the source document as lines overlapping each other. This column of links overlies the images, which are shown distorted and overlapping: Changed measures of length and width alter the proportions of the images. In this distorted manner the illustrations are integrated into the transformed webpage. The source code is shown in small and overlapping letters in the left column. Fragments of the source code appear in large letters in a second column shifted to the right. These letters lie over the letters of the left column.

"Without addresses" and "The Shredder" use arbitrary documents called up in the net as basic materials for computing processes controlled by algorithms. The results of these computing processes, which run on servers and are controlled by Perl, still partially allow the original elements to be reconstructed. The two projects by Blank & Jeron and Napier demonstrate the relation between the source code and the browser presentation as depending on a modifiable technical configuration. The following projects supplement the browsers for the presentation of webpages with alternative browsers presenting aspects of the data traffic.

Projects modifying webpages on the server side, like Napier's "The Shredder", are labeled "art browsers" and distinguished from "browser art" like Shulgin's "Form Art" (see chap. VI.3.2). 32 But then it is impossible to designate the alternative browsers as "browser art". The most obvious way out of the resulting terminological confusion, and the one chosen in the following, is to designate as "art browsers" only projects that make alternative browsers available for download: Projects by Blank & Jeron and Napier, which modify presentations within available browsers, are not categorised as "art browsers".

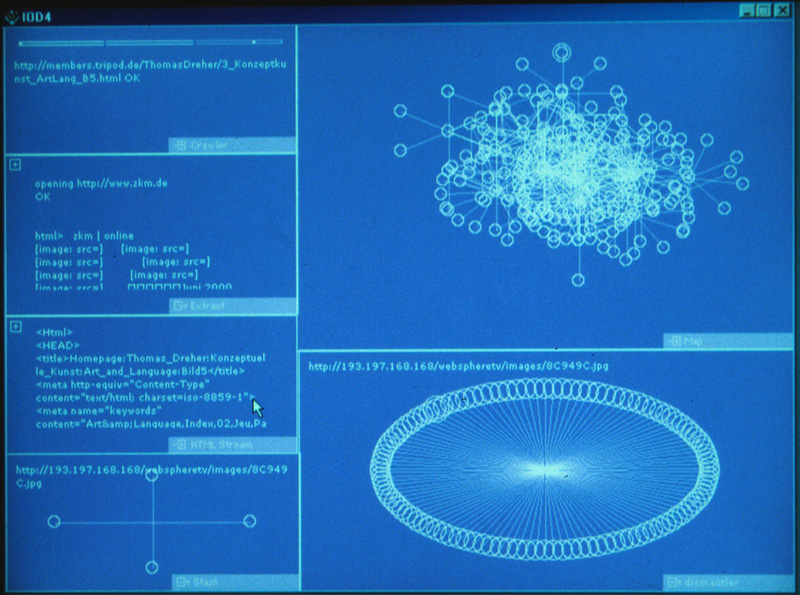

I/O/D: Web Stalker, 1997, browser (photo from the monitor, August 2000).

The art browsers "Web Stalker" by I/O/D (Matthew Fuller, Colin Green, Simon Pope, 1997) 33 and Maciej Wisniewski's "Netomat" (1999) 34 thematise the data flow provoked by links and search systems. They addressed aspects not presented by the most used contemporary browsers (Internet Explorer, Netscape Communicator): the data traffic between servers initiated by the URL addresses in links. "Web Stalker" visualised the relations between linked webpages diagrammatically as an ongoing process in which a crawler captures the documents from link to link, whereas "Netomat" used the links of search systems to present the found files with their contents as a data stream of findings.

After the "Web Stalker" has been downloaded and opened, an empty window appears, monochrome black or optionally purple or blue. Users drag rectangles and assign to them the functions described below. Each user ensures visual clarity by selecting the windows' functions, sizes and locations.

After the input of a URL address "Web Stalker" starts to look for the links of this webpage, then follows the links of the linked webpages, and so forth. A diagram ("Map") visualises this link structure as an ongoing computing process. Webpages are represented as circles and links as lines. With the growing number of links the circles become brighter. The "crawler" shows the URL address it is currently dealing with. A scale visualises how much of a webpage's source code the "Web Stalker" has investigated. "HTML stream" presents the source code as a part of the data flow grasped by the crawler and directed by the links from document to document. The "Dismantler" enables users to drag circles out of other windows (drag and drop). The "Dismantler" preserves the link structure of a URL address as it is presented in the diagram with circles and lines. Via clicks on circles users can select the URL addresses indicated at the upper side of the rectangles of "Dismantler" and "Stash". If such a circle is dragged into "Extract", then a text is presented that results from a readout of the source code and the computing processes initiated by this code. This text can be stored as a .txt file. If circles are dragged into "Stash", then the URL addresses can be stored in a text file. These addresses can be copied and called up with a conventional web browser.
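The procedure of following links from document to document can be sketched in Python. The sketch is not the code of "Web Stalker": it walks through a small invented set of pages held in memory instead of the web, and the names `LinkExtractor`, `crawl` and `TOY_WEB` are assumptions. The collected pages correspond to the circles of the "Map" and the collected links to its lines.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href values of all anchor tags in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.links.extend(value for name, value in attrs
                              if name == "href" and value)


def extract_links(markup: str) -> list:
    parser = LinkExtractor()
    parser.feed(markup)
    parser.close()
    return parser.links


def crawl(start: str, fetch, limit: int = 50):
    """Follow links breadth-first; return pages (circles) and links (lines)."""
    pages, queue, lines = [start], deque([start]), []
    while queue:
        url = queue.popleft()
        for target in extract_links(fetch(url)):
            lines.append((url, target))
            if target not in pages and len(pages) < limit:
                pages.append(target)
                queue.append(target)
    return pages, lines


# Invented pages standing in for documents on the web.
TOY_WEB = {
    "a.html": '<a href="b.html">b</a> <a href="c.html">c</a>',
    "b.html": '<a href="a.html">back</a>',
    "c.html": "no links",
}
pages, lines = crawl("a.html", lambda url: TOY_WEB.get(url, ""))
```

A diagram drawn from these two lists would grow step by step with every document the crawler opens, which is the ongoing process the "Map" makes visible.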

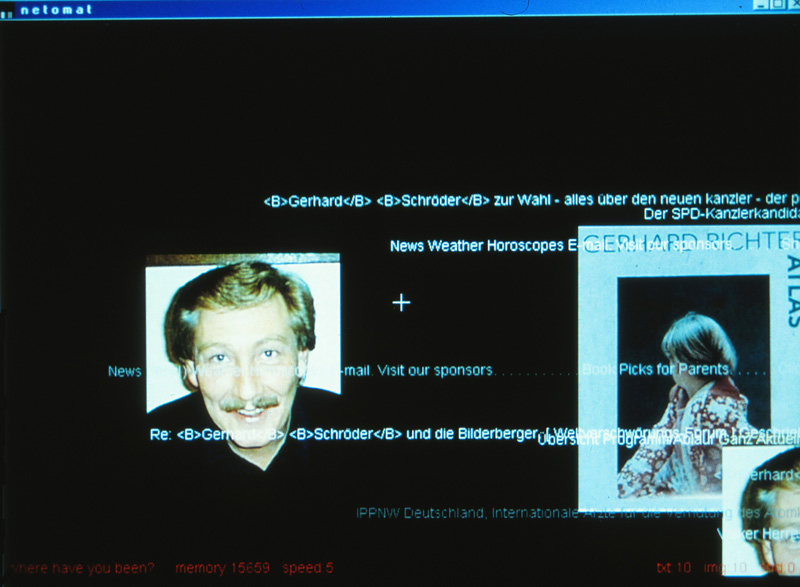

Wisniewski, Maciej: Netomat, 1999, browser (photo from the monitor, October 2000).

"Netomat" shows a data stream of images (ignored by "Web Stalker") and text fragments. 35 If "Netomat" is started once a web connection is active, the art browser begins its data access. At the bottom right of the browser window "Netomat" reports how many text, image and sound files are active. The direction and speed of the data flow in the browser display can be modified with cursor movements. If the cursor is moved from the centre towards an edge, the data stream accelerates. The flow direction changes contrary to the cursor movements. A text input in the bottom line starts a new data stream once the Enter key is pressed. Because the memory function cannot be switched off, the files shown in earlier data streams do not disappear when a new stream starts. Text fragments that appear can suggest further text inputs, which in turn integrate new documents into the visualisation of memorised elements.

Wisniewski prevents targeted data access. Text input produces surprise findings but does not let users select elements from the data stream and recontextualise them: the browser surface presenting the data stream offers no click functions.

"Netomat's" use of documents found on the web dissolves the data constellations that the source code defines for the browser presentation of webpages: texts are fragmented and pictures isolated. "Netomat" replaces the usual browser presentation of static webpages with the presentation of a data flow. This flow keeps handing found web documents over to users while they type further text fragments: the surprising findings, the images and texts, cannot be replaced by the results of a targeted search for specific topics.

Whereas "Netomat" explores the content of webpages, "Web Stalker" visualises the connections established from link to link: the computing processes that build up these connections produce a continually developing diagram.

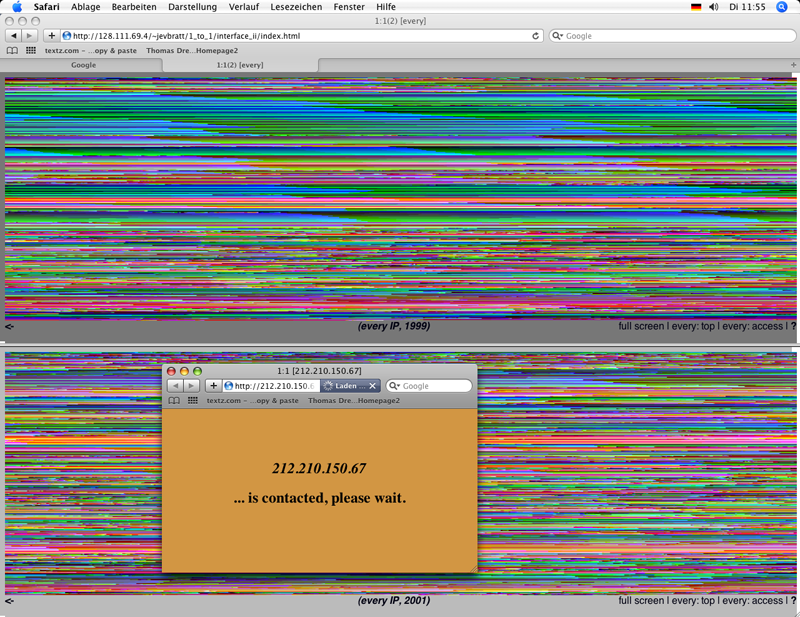

Jevbratt, Lisa: 1:1, every IP, 1999, 2001-2002, web project (screenshot 2009).

"Web Stalker" taps the web, an expanding archive of interlinked files, only partially, starting from a URL address chosen by a web participant. Lisa Jevbratt's 1:1 (1999, updated in 2001-2) visualises the IP addresses of homepages found by crawlers. The overview presents a Web 1.0 whose number of websites might seem small enough for all of them to be visualised. Nevertheless, in 1999 a crawler needed too much time to capture all available IP addresses of homepages. A crawler of the artists' group C5, of which Jevbratt was a member, gathered "two percent of the spectrum and 186,100 sites were included in the database." 36 In Jevbratt's visualisations of the accessible homepages, for example in "every IP", the clarity of the visual arrangement and its combination with functionality (the links to the webpages) suffer from the mass of IP addresses found.
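Jevbratt describes the search as "interlaced": the crawlers "searched selected samples of all the IP numbers, slowly zooming in on the numerical spectrum" (see note 36). The following Python sketch shows one way such a coarse-to-fine ordering could work. It is an assumption for illustration, not the C5 crawler: the function names and the rule of halving the stride are hypothetical, and no network access takes place.

```python
import ipaddress
from itertools import islice


def interlaced_order(size, first_stride):
    """Yield every index in range(size) in coarse-to-fine passes.

    `first_stride` is assumed to be a power of two. The first pass visits
    every multiple of it; each later pass halves the stride and visits the
    odd multiples of the new stride, which are the indices not yet seen.
    """
    yield from range(0, size, first_stride)
    stride = first_stride // 2
    while stride >= 1:
        yield from range(stride, size, 2 * stride)
        stride //= 2


def sample_addresses(count):
    """First `count` IPv4 addresses of an interlaced pass over all 2**32."""
    indices = islice(interlaced_order(2**32, 2**31), count)
    return [str(ipaddress.IPv4Address(index)) for index in indices]


print(list(interlaced_order(16, 8)))
# [0, 8, 4, 12, 2, 6, 10, 14, 1, 3, 5, 7, 9, 11, 13, 15]
print(sample_addresses(4))
# ['0.0.0.0', '128.0.0.0', '64.0.0.0', '192.0.0.0']
```

Stopped at any point, such a pass has sampled the whole numerical range at a uniform density, which corresponds to the idea of a portrait "with increasing resolution" rather than a slice.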

DNS servers translate the domain names in the URL addresses of websites into IP addresses (see chap. VI.3.1). Jevbratt's visualisations present the IP addresses of homepages as a dataspace with its own 'geography': the IP addresses with 10 numbers make it possible to define 'distances' – close and distant relations – between them.
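One possible reading of such 'distances', offered here as an assumption rather than as a description of Jevbratt's software, treats each IPv4 address as a single 32-bit integer and takes the difference between two integers as their distance. The Python sketch below uses the standard `ipaddress` module; the function names are hypothetical, and the sample addresses come from ranges reserved for documentation.

```python
import ipaddress


def ip_to_int(address):
    """Read a dotted IPv4 address as one 32-bit integer."""
    return int(ipaddress.IPv4Address(address))


def ip_distance(a, b):
    """Numerical distance between two IPv4 addresses."""
    return abs(ip_to_int(a) - ip_to_int(b))


def nearest(target, candidates):
    """The candidate address that is numerically closest to `target`."""
    return min(candidates, key=lambda c: ip_distance(target, c))


print(ip_distance("192.0.2.10", "192.0.2.20"))  # 10
print(ip_distance("192.0.2.10", "192.0.3.10"))  # 256
print(nearest("192.0.2.10", ["192.0.3.10", "192.0.2.200", "198.51.100.1"]))
# 192.0.2.200
```

Numerical closeness says nothing certain about physical or organisational closeness; it only orders the address space, which is what allows it to be laid out as a 'geography'.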

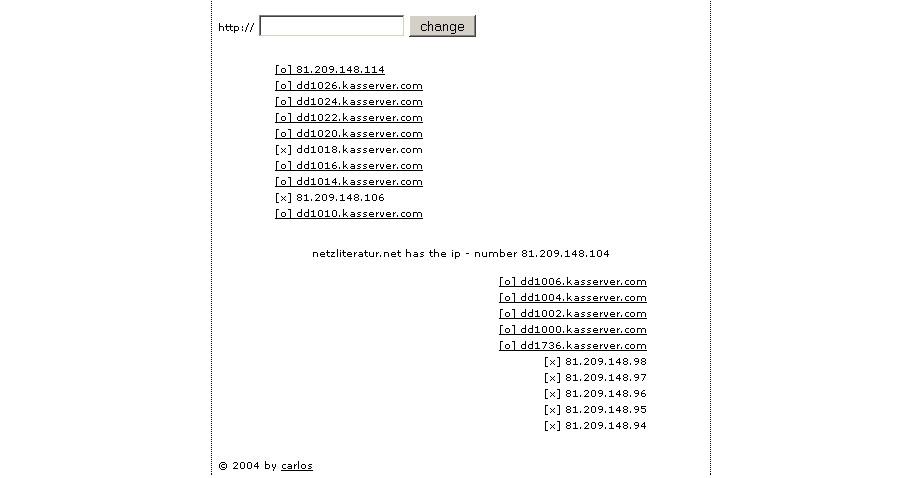

Katastrofsky, Carlos: Area Research, 2004, web project (screenshot 2007).

The projects "Neighbourhood Research" and "Area Research" (2004) by Carlos Katastrofsky (Michael Kargl) thematise the proximity or distance of IP addresses by searching for IP addresses near those of the URL addresses entered by web participants. In Katastrofsky's projects the search can be repeated by entering further URL addresses, whereas Jevbratt visualises the results of two completed crawler runs (1999, 2001-2). The projects by Jevbratt and Katastrofsky complement the aspects of web data traffic shown by the art browsers "Web Stalker" and "Netomat".

The art browsers "Web Stalker" and "Netomat" lend themselves to experimental rather than instrumental and target-oriented modes of observation. Aspects of the semantic web (as a vocabulary used by humans in speech acts and connected to semantic fields) are of primary importance in collaborative writing projects with databases as stores for contributions (see chap. VI.2.3), whereas the art browsers show technical procedures. The two levels of information, in a technical and in a semantic context, thematised in cybernetics (see chap. II) and information aesthetics (see chap. III) remain important aspects of a "problematic". 37

The relation between cybernetic models (see chap. II.2) and cybernetic sculptures (see chap. II.3) can be understood as a prefiguration of the relation between models of a net practice and net art. The cybernetic concept of models defines a relation between theoretical statements and a built model (model level 1) and thereby shows artists how they can install machine processes that gain the status of models as exemplary realisations (model level 2). In the same way, net art tries to realise a net practice that is exemplary for a non-commercial information context of the kind net activists have defended against threats: the free exchange of information in a deterritorialised data world becomes a model (model level 1).

On the web the term "art" does not signify a status declared by institutions and defined within discourses, but the provision of technically successful models that also offer a way to observe net conditions: they are models for an exemplary net practice (model level 2). Net activists feel obliged to respond to critical observations of net conditions by demonstrating who determines these conditions or tries to change them, how, and with which interests. This leads net art to demonstrate the consequences of confrontations between interests and power structures.

Collaborative writing projects (see chap. VI.2.3) and alternative browsers (see chap. VI.3.3) offer web practices that provoke net observations (as reflections). Either models of participation question the daily routines of web participants who call up prepared, unchangeable contents, or the preconditions are created for critical observations of the net conditions that underlie the everyday supply of documents.

In the context of the experimental video culture of the seventies Dan Sandin and Phil Morton extended the "Analog Image Processor" into an open platform for developers and provided with the "Copy-It-Right-Licence" an early example of Open Source and Open Content (see chap. IV.1). In the eighties artists of the demoscene integrated this open form of distribution into their shared ways of programming personal computers (see chap. IV.2.1.4.3) and later used the internet's possibilities for a no-cost distribution of their animation code.

In comparison to older media the web facilitates works-in-progress for the development of software (Open Source) or for the construction of knowledge systems (Open Content), while commercially oriented producers try to establish closed systems in the form of scarce and costly final products. On the one hand the barrier between producer and consumer vanishes in the gift economy; on the other hand this barrier is upheld by distributors and sellers. One effect of the web is a wider gap between the open source model, with unlimited distribution and cooperative production, and the commercial distribution models, now augmented by e-commerce with a digital rights management based on copy-restriction software to be installed on customers' computers.

Since 1999 the relations between Open Source, Open Content and new distribution models have been discussed at four Oekonux conferences. In the eighties and nineties Richard Stallman, Eric Steven Raymond, Richard Barbrook, John Perry Barlow and Lawrence Lessig became the most famous net activists writing on Open Source and Open Content.

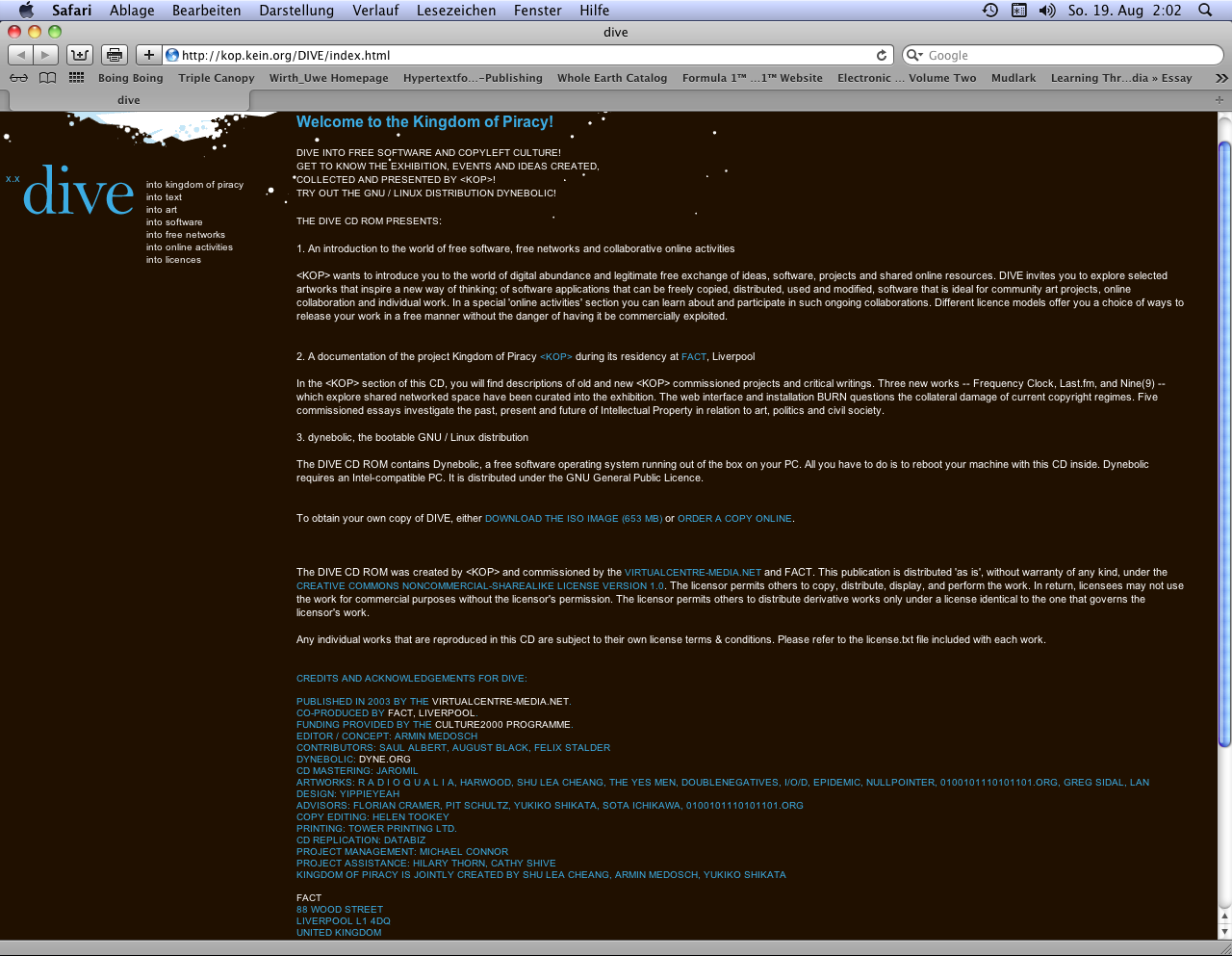

In net activism, the restrictions that copyright and patent laws impose on the further development and distribution of software were and are discussed as barriers blocking a free exchange of data and a cooperative development of software. This activism fights economic, legal and technical obstacles to a free data exchange. Platforms like "Illegal Art" (2002-6; only parts of the original web contents, sound and video, are now stored in the Internet Archive) and "Kingdom of Piracy" (2002-6) show how artists thematise basic problems of web usage and working conditions restricted by copyright and patent laws. The technical, economic and legal conditions for access to data as well as for the download, modification and distribution of files constitute an important part of net art's context. If projects by net artists show web conditions in an exemplary manner and demonstrate the tensions between technical possibilities and restrictions imposed by proprietary practices, then the projects either become a part of net activism (Negativland/Tim Maloney, see below) or, for example by providing tools (model level 2), cross the limits of art towards activism (The Yes Men, see below).

Medosch, Armin (ed.): DIVE: An Introduction into the World of Free Software and Copyleft Culture, FACT in Liverpool, 2003, web platform (screenshot 2012).

The comprehensive project "DIVE: An Introduction into the World of Free Software and Copyleft Culture" was integrated into the platform "Kingdom of Piracy". "DIVE" focuses on relations between software development and a free distribution (Open Source) without the restrictive practices supported by copyright and patent laws. With "DIVE", "Kingdom of Piracy" became in 2003 the most comprehensive and most concise platform for relations between free software, net activism and net art.

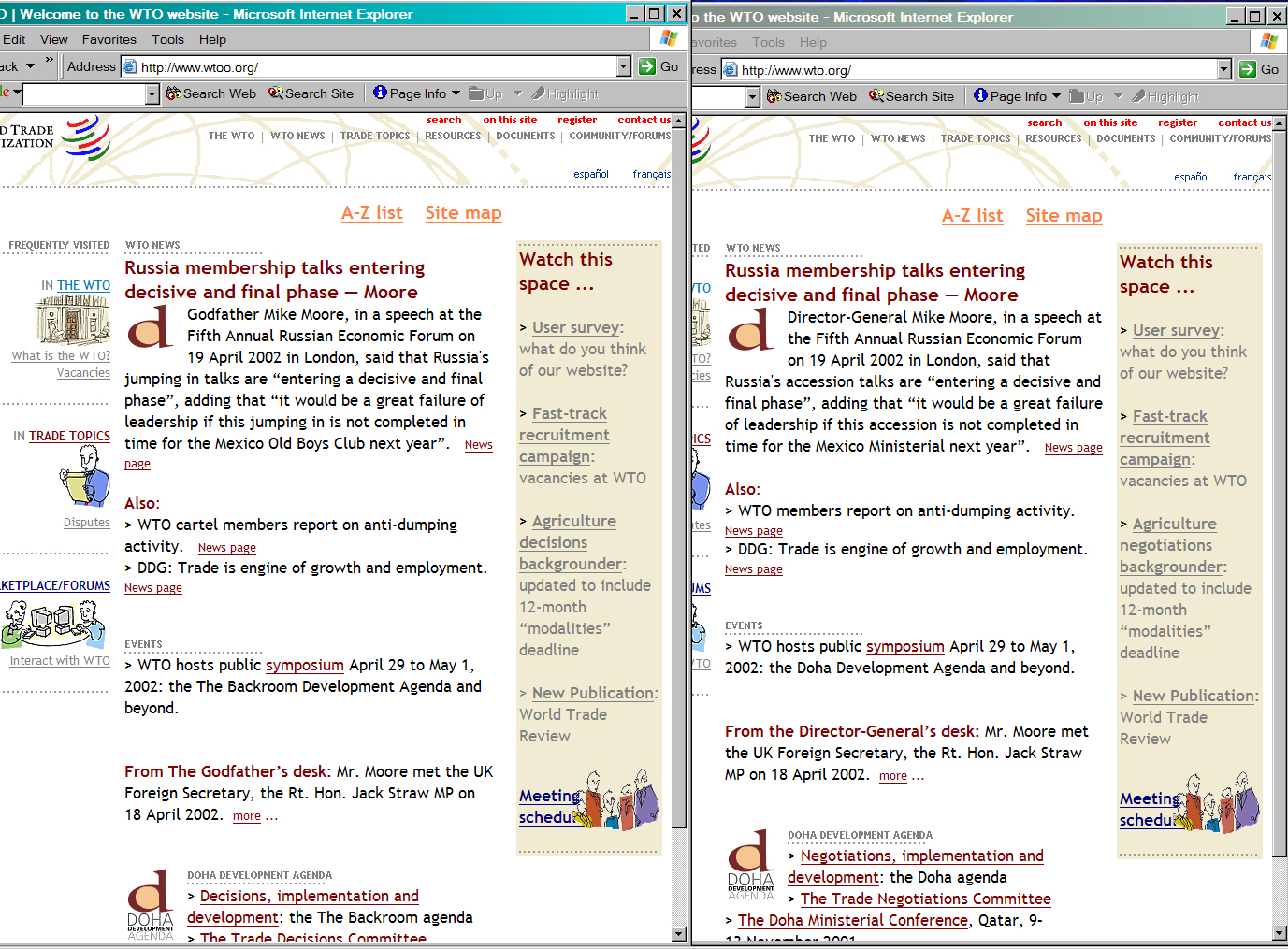

The Yes Men/Detritus/Doll, Cue P.: Reamweaver Version 2.0, tool, 2002. Screenshot of the creation of a pseudo-mirror site of the World Trade Organization's website.

One of the activist projects on the platform was The Yes Men's "Reamweaver". In 2002 Gladwin Muraroa of The Yes Men, Nickie Halflinger of Detritus and Cue P. Doll (Amy Alexander) developed "Reamweaver Version 2.0" in Perl. Once this tool for the automated modification of sites was installed on a server via FTP access, it enabled web participants to create parodying pseudo-mirror sites (they appear to be a 'mirror', a copy of a site under another URL address, but through their modifications they comment on the copied sites). "Reamweaver" was launched by RTMark and supported by interested web participants. 38 Fakes of the World Trade Organization's (WTO) site are examples of the tool's uses. 39 When critical pseudo-mirror sites are censored, "Reamweaver" enables web participants to create new counterfeits with further critical and parodying statements within a short time.
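RTMark describes the tool as mirroring a website "while changing any words that they choose" (see note 38). The principle of such a word substitution can be sketched as follows. The sketch is written in Python rather than Perl and is not derived from the "Reamweaver" source; the function name `funhouse_mirror`, the sample markup and the substitution list are assumptions made for illustration, and the regular expression for tags is deliberately crude.

```python
import re


def funhouse_mirror(html, substitutions):
    """Return a copy of `html` with whole words swapped in the text only.

    Tags, and with them link targets and other attributes, stay untouched.
    `substitutions` maps original words to their replacements.
    """
    if not substitutions:
        return html
    words = "|".join(re.escape(word) for word in substitutions)
    pattern = re.compile(r"\b(" + words + r")\b")

    def swap(match):
        return substitutions[match.group(1)]

    # Splitting on a capturing group keeps the tags in the result list.
    parts = re.split(r"(<[^>]*>)", html)
    rewritten = [
        part if part.startswith("<") else pattern.sub(swap, part)
        for part in parts
    ]
    return "".join(rewritten)


original = '<p>We support <a href="/trade.html">free trade</a> for all.</p>'
mirrored = funhouse_mirror(original, {"trade": "lunch", "support": "question"})
print(mirrored)
# <p>We question <a href="/trade.html">free lunch</a> for all.</p>
```

Because only the text between tags is rewritten, the layout and link structure of the copied page survive, which is what lets a pseudo-mirror pass for the original at first glance.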

First page of a two-page invitation from Media Tank to "Illegal Art Extravaganza", the special events accompanying the travelling exhibition "Illegal Art: Freedom of Expression in the Corporate Age", Old City's Nexus Gallery, Philadelphia 2003.

Carrie McLaren, editor of the "Stay Free Magazine", curated the travelling exhibition "Illegal Art: Freedom of Expression in the Corporate Age". The exhibition and its site presented many examples from art, film and music that repeat and modify copyright-protected sources. Legal protection was provided by the Chilling Effects Clearinghouse, an association in which the Electronic Frontier Foundation collaborated with the law schools of five American universities (Berkman Center for Internet & Society/Harvard University; Stanford Center for Internet & Society; Samuelson Law, Technology and Public Policy Clinic/University of California; University of San Francisco Law School; University of Maine School of Law).

Copyright does not protect authors against those who exploit their rights. Rather, exploiters use copyright as a means to tie the exploitation of rights to legal practice in a strategically calculated manner that disempowers authors (the links on the webpage "Copyright Articles" led to texts about abuses by the copyright industry).

The website of the exhibition presented film extracts, animations, musical works and artworks in different media, in part with their legal history: some lawsuits had not been concluded by a judgement during the run of the travelling exhibition. The complaints ("cease-and-desist orders") of copyright owners and exploiters often disregard "Fair Use" (see below); nevertheless the defendants frequently relent before a lawsuit starts, because such lawsuits last long and the costs are high.

The curator's intention was to show a broad public how copyright is misused to restrain artistic creativity instead of protecting it 40 and to disturb the copyright industry's lobbying and litigious practice. The exhibition gave authors of newspaper reports an occasion to discuss the perversion of copyright into a corporate right. 41 Apart from the San Francisco Museum of Art, no museum with a major collection of 20th century art exhibited "Illegal Art", even though such museums are affected by a litigious practice that disregards "Fair Use": neither Marcel Duchamp's L.H.O.O.Q. (1919) nor Pop Art could be created under today's legal conditions.

The copyright industry stigmatises the appropriation of parts of a copyright-protected artwork as piracy, as theft of intellectual property. "Illegal Art" exhibits examples of how artists copy and quote with, mostly ironic, defamiliarisations or alienations. This "recombinant theater" 42 parodies and comments on contemporary mass culture through the way it picks up specific objects. The technical possibility of precise digital copies without loss of quality is used in procedures of appropriation and modification to articulate criticism of the mass media's organisation of spectacle. Procedures of quotation, plagiarism and transformation are used for an unveiling, exaggerating or alienating criticism of economic and social conditions; the "Fair Use Doctrine" of US law permits appropriations "for purposes such as criticism, comment...". 43 Entertaining modes of recycling and activist-critical strategies of recombination are (combinable) practices of appropriation that intervene in the copyright industry's strategies (those of corporations and their lawyers) to control the use and distribution of mass culture's signs. Here we are faced with artistic and activist (re-)appropriations.

The website of "Illegal Art" itself was an example of the procedures of a "communication guerilla" 44 using strategies of (re-)appropriation ironically: when the homepage was opened, a window appeared with the following text: "ELECTRONIC END USER LICENSE AGREEMENT FOR VIEWING ILLEGAL ART EXHIBIT WEBSITE AND FOR USE OF LUMBER AND/OR PET OWNERSHIP". As soon as a reader of this parody of a license clicked on "I agree", the contract and the homepage disappeared.

"Illegal Art" was conceived as a plea for extending the applicability of the "Fair Use Doctrine". This extension is a conclusion drawn from the practices of the (re-)appropriation culture. Negativland outlined applications of the "Fair Use Doctrine" that are adequate from their point of view:

...we would have the protections and payments to artists and their administrators restricted to the straight-across usage of entire works by others, or for any form of usage at all by commercial advertisers. Beyond that, creators would be free to incorporate fragments from the creations of others into their own work. 45

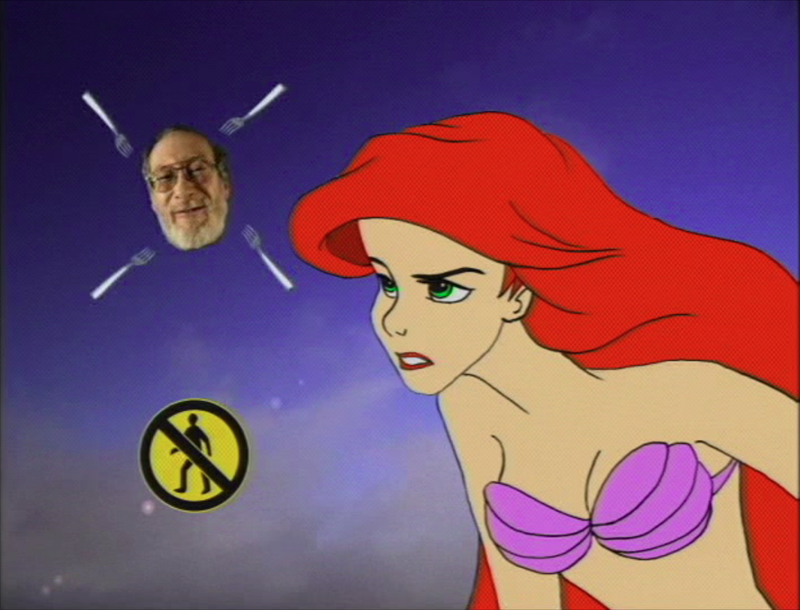

Negativland/Maloney, Tim: Gimme the Mermaid, film, 2000/2002.

Negativland and the former Disney film animator Tim Maloney assembled different sources to create Gimme the Mermaid (2000/2002, an exhibit of "Illegal Art") as a comment on the behaviour of owners and administrators of copyrights. Copyrights protect property, and property is an important part of an economically based power structure: copyrights secure property, and property is power. The telephone voice of a music industry lawyer was visualised as the speech of the mermaid Ariel (a figure from a Disney production) and set to music as a cover version of Black Flag's Gimme Gimme Gimme: "I own it or I control it...You can't use it without my permission." The decision on the appropriation of a copyright-protected "it" is taken not by the critic but by the person criticised: that is the situation the "Fair Use Doctrine" should prevent. The barriers to downloads and further processing created by one-sided interpretations of copyright and by the "Digital Millennium Copyright Act" (DMCA) 46 threaten the net architecture created for free access.

Art forms and their distribution in legal, economic and media contexts determine each other, because the production of art cannot be separated from the production conditions that artists thematise via critical self-embedding. If Tim Maloney shows the strategies of copyright administrators with the very means the administrators tried to prohibit, then he needs a good defence lawyer. In "Illegal Art" the bundling of activist efforts is organised as legal assistance for artists creating test cases for legal proceedings. Once verdicts for or against the works featured in "Illegal Art" have been reached, future verdicts in comparable cases can be anticipated.

Dr. Thomas Dreher

Schwanthalerstr. 158

D-80339 München

Germany.

Homepage with numerous articles on art history since the sixties, among others on Concept Art and Intermedia Art.

Copyright © (as defined in Creative Commons Attribution-NoDerivs-NonCommercial 1.0) by the author, August 2012 and March 2014 (German version)/March 2014 (English version).

This work may be copied in noncommercial contexts if proper credit is given to the author and IASL online.

For other permission, please contact IASL online.

Annotations

1 Arns: Netzkulturen 2002, p.21s.; Berners-Lee: Web 1999, p.69,72ss.,79,97ss.; Gere: Culture 2008, p.152s.; Warnke: Theorien 2011, p.49,52; Weiß: Netzkunst 2009, p.30s.

On the growth of the number of users from 1995 to 2002 and the number of websites from 1993 to 2002: Matis: Wundermaschine 2002, p.312s.; Warnke: Theorien 2011, p.48s. Cf. Hyperakt/Vizzuality/Google Chrome Team: Evolution (2012): In 2011 2.27 billion participants used the web.

2 Berners-Lee/Cailliau: WorldWideWeb 1990.

3 Berners-Lee: Web 1999, p.33-37.

4 Berners-Lee: Web 1999, p.28-31,38-45.

5 Browser "WorldWideWeb": Berners-Lee: Web 1999, p.45s.; Berners-Lee: WorldWideWeb Browser undated; Matis: Wundermaschine 2002, p.311; Wikipedia: WorldWideWeb 2012.

Other browsers: Berners-Lee: Web 1999, p.56s.,64,67; Matis: Wundermaschine 2002, p.315s.

6 FTP: Warnke: Theorien 2011, p.36.

TCP/IP: Plate: Grundlagen 2012, chap. 11: TCP/IP; Warnke: Theorien 2011, p.43-46,51.

7 Bunz: Speicher 2009, p.100-106; Kahnwald: Netzkunst 2006, p.49s.; Plate: Grundlagen 2012, chap. 11: TCP/IP; Weiß: Netzkunst 2009, p.37ss.,41s.

8 HTTP V 1.0: Request for Comments/RFC 1945. Warnke: Theorien 2011, p.86-90.

9 Since 2002 defined in RFC 3305. Precursor: RFC 1630, 1994 and others. Executing scientists: W3 Consortium/IETF (Berners-Lee: Web 1999, p.36s.,39s.).

10 Warnke: Theorien 2011, p.64; Weiß: Netzkunst 2009, p.36.

11 Postel: Domain 1983 (RFC 881); Warnke: Theorien 2011, p.62-76; Weiß: Netzkunst 2009, p.33-36.

Censorship with DNS filter, an example: Dreher: Link 2002-2006, chap. ODEM.

12 Berners-Lee: Web 1999, p.41s.; Palmer: History undated; WWW Consortium: HTML 1992.

13 Berners-Lee/Connolly: Hypertext 1995 (RFC 1866).

14 Berners-Lee: Web 1999, p.42 (quotation),41-44.

15 XHTML 1.0, January 2000, reformulation of HTML 4.01: WWW Consortium: XHTML 2000/2002.

16 Warnke: Theorien 2011, p.96s.; Weiß: Netzkunst 2009, p.50.

17 Berners-Lee: Web 1999, p.42.

18 "Dictatorship of the beautiful appearance"/"Diktatur des schönen Scheins": Stephenson: Diktatur 2002 (German title of Stephenson: Beginning 1999).

In a lecture Holger Friese demonstrated the recognizable traces of the "Großes Data Becker Homepage Paket": "Das kleine Homepagepaket", shift e.V., Berlin, 1/23/1999 (Dreher: Unendlich 2001).

19 Friese: Selection 2008, p.24s.

20 Friese: Artworks 2008.

Lit.: Dreher: Unendlich 2001; Rinagl/Thalmair/Dreher: Monochromacity 2011; Vannucchi: Friese 1999.

21 Berry: Thematics 2001, p.84s.; Cramer: Discordia 2002, p.71,75,78; Cramer: Statements 2011, p.235ss.,243; Greene: Internet 2004, p.40s.; Kerscher: Bild 1999, p.110.

22 Lialina and a filmmaker at Moscow's film club "CinePhantom". Further pictures are based on stills "from the Hollywood film 'Broken Arrow'" (Baumgärtel: [net.art] 1999, p.129).

23 Simanowski: Hypertext 2001, part 4; Simanowski: Interfictions 2002, p.95s.

24 Compare the opposing explanations of the relation frame – narration by Julian Stallabrass and Roberto Simanowski: According to Stallabrass the observers click "through screens without orientation" (Stallabrass: Internet 2003, p.58s.), whereas according to Simanowski the "reading process follows the [narrative] linearity fairly close" (Simanowski: Interfictions 2002, p.93. Cf. Berry: Thematics 2001, p.80ss.; Manovich: Language 2001, p.324s.).

25 With scripts developed by Laszlo Valko. Lit.: Greene: Internet 2004, p.80s. with ill.54; Weiß: Netzkunst 2009, p.203-235, 375ss.

26 Greene: Internet 2004, p.42s. with ill.23.

27 Heath Bunting repeats the text of the following newspaper article: Flint, James: The Power of Disbelief. In: The Daily Telegraph, 4/8/1997 (on Heath Bunting). Lit.: Arns: Netzkulturen 2002, p.67s.; Arns: Readme 2006; Berry: Thematics 2001, p.196-199; Greene: Internet 2004, p.42-45; Heibach: Literatur 2003, p.110s.; Stallabrass: Internet 2003, p.29s.

28 Dreher: Radical Software 2004, chap. Electronic Disturbance: Tools, Sites & Strategien.

29 "Toywar": Arns: Netzkulturen 2002, p.62-65; Arns: Toy 2002, p.56-59; Drühl: Künstler 2006, p.283-293; Greene: Internet 2004, p.125ss.; Grether: Etoy 2000; Gürler: Strategien 2001, chap. Toywar; Paul: Art 2003, p.208s.; Richard: Anfang 2001, p.213-223; Richard: Business 2000; Stallabrass: Internet 2003, p.96-101; Weiß: Netzkunst 2009, p.199,254-265; Wishart/Bochsler: Reality 2002.

30 "Without addresses" is no longer stored on a web server. It was "programmed with Perl and Postscript. The resulting Postscript file was rendered to a GIF file in using pbmplus." (Karl-Heinz Jeron, e-mail 8/15/2012, in German) Lit.: Blase: Street 1997; Dreher: Stadt 2000, chap. without addresses; Gohlke: Go undated; Huber: Browser 1998, chap. 4.1 Joachim Blank/Karl-Heinz Jeron: without addresses.

31 "The Shredder" was programmed in Perl and Javascript. Lit.: Greene: Internet 2004, p.99ss.; Kahnwald: Netzkunst 2006, p.18s.; Napier: Shredder 1999; Napier: Shredder 2001; Simanowski: Interfictions 2002, p.151,161; Stallabrass: Internet 2003, p.47.

32 Kahnwald: Netzkunst 2006, p.7-11. Cf. Galloway: Browser 1998; Simanowski: Interfictions 2002, p.165,151 (Simanowski labels "The Shredder" and I/O/D's "Web Stalker" presented below as "art browsers"); Maciej Wisniewski in Hadler: Informationschoreographie undated: "The 'Netomat' is no longer a browser art but rather an art browser."

Overviews of alternative browsers were offered by the Browserdays organised in different cities (Amsterdam, Berlin, New York) and in 2001 at the "Browsercheck" presented in Berlin at "raum 3" "under everyday conditions".

33 "Web Stalker" was developed in Lingo, the programming language for Macromedia Director. Lit.: Baumgärtel: Browserkunst 1999, p.88,90; Baumgärtel: [net.art] 1999, p.152-157; Dreher: Politics 2001, chap. I/O/D: Web Stalker; Fuller: Means 1998; Gohlke: Software 2003, p.58s.; Greene: Internet 2004, p.78,84-87; Heibach: Literatur 2003, p.213s.; Kahnwald: Netzkunst 2006, p.16ss.; Manovich: Language 2001, p.76; Paul: Art 2003, p.118s.; Simanowski: Interfictions 2002, p.165s.; Stallabrass: Internet 2003, p.21,23,39,55,126; Weibel/Druckrey: net_condition 2001, p.276s.; Weiß: Netzkunst 2009, p.235-242.

34 Dreher: Informationschoreografie 2000, chap. Netomat; Fourmentraux: Art 2005, p.86s.; Greene: Internet 2004, p.131; Heibach: Literatur 2003, p.214; Kahnwald: Netzkunst 2006, p.21s.,40s.; Manovich: Language 2001, p.31,76; Stallabrass: Internet 2003, p.126; Weibel/Druckrey: net_condition 2001, p.80s.

35 In 2000 the author could not call up the sound files on Windows 98. According to Wisniewski the sound files could be called up with fast connections, whereas slow connections required the sound function to be deactivated. Wisniewski no longer offers "Netomat" for download on netomat.net. The documentation Wisniewski offered on this site is also no longer available.

36 Jevbratt: 1:1 2002. Cf. Jevbratt: Infome 2003, chap. abstract reality: "1:1 was originally created in 1999 and it consisted of a database that would eventually contain the addresses of every Web site in the world and interfaces through which to view and use the database. Crawlers were sent out on the Web to determine whether there was a Web site at a specific numerical address. If a site existed, whether it was accessible to the public or not, the address was stored in the database. The crawlers didn't start on the first IP address going to the last; instead they searched selected samples of all the IP numbers, slowly zooming in on the numerical spectrum. Because of the interlaced nature of the search, the database could in itself at any given point be considered a snapshot or portrait of the Web, revealing not a slice but an image of the Web, with increasing resolution."

Lit.: Baumgärtel: [net.art 2.0] 2001, p.192-197; Munster: Media 2006, p.82ss.; Paul: Art 2003, p.181s.

37 «Problématique»/"problematic": Althusser: Marx 1969, p.32,34ss.; Art & LanguageNY: Blurting 1973, p.68, no.282.

The data visualisation "Small Talk" (2009) by Use All Five, Inc. maps a social network ("Twitter") according to semantic criteria.

38 RTMark: Reamweaver 2002: "The Reamweaver software...allows users to instantly 'funhouse-mirror' anyone's website in real time, while changing any words that they choose."

39 From http://www.wto.org to http://www.gatt.org (8/5/2012) and to http://www.wtoo.org/ (12/14/2003, no longer found on 8/5/2012. Screenshot of the creation with "Reamweaver" in the NETescopio database: URL: http://netescopio.meiac.es/proyecto/0220/reamweaver_samples/wtocompare.jpg (3/9/2014)).

40 Heins: Progress 2003.

41 E.g. Dawson: Art 2003; Lotozo 2003; Nelson: Exhibition 2003.

42 Critical Art Ensemble: Disturbance 1994, Chapter 4.

43 Without author: United States Code undated (Title 17: Copyrights, Chapter 1, Section 107).

44 Autonome a.f.r.i.k.a. gruppe/Blissett, Luther/Brünzels, Sonja: Handbuch 1997/2001.

45 Negativland: Fair Use undated.

46 Without author: U.S. Copyright 1998.
